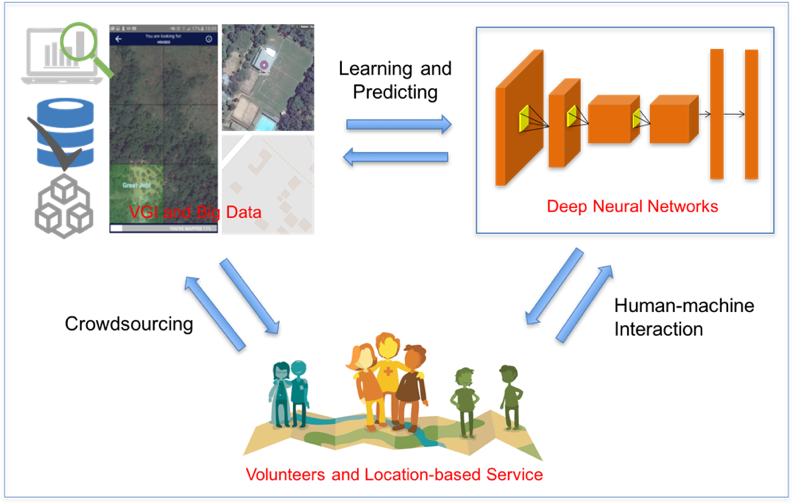

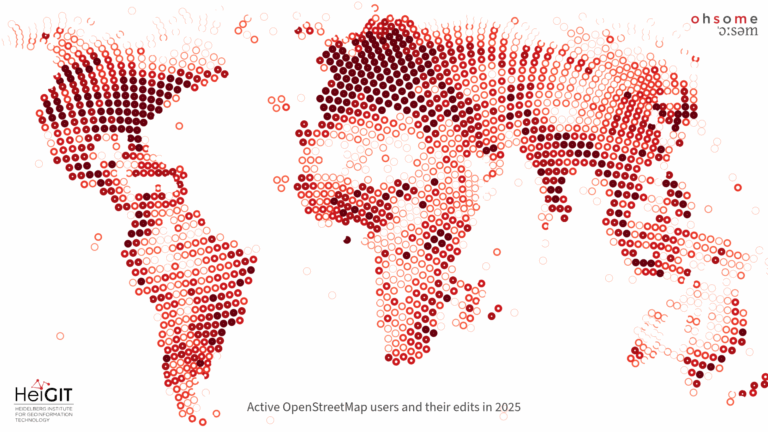

Satellite images are widely used in humanitarian mapping, which involves labeling buildings, roads, and other features to support humanitarian aid and economic development. However, this labeling is currently done mostly by volunteers. In a recently accepted study, we use deep learning to automate the humanitarian mapping tasks of MapSwipe, a mobile app. Current deep learning techniques such as Convolutional Neural Networks (CNNs) can recognize ground objects in satellite images, but they require a large number of training labels for each specific task.

We address this problem by fusing multiple freely accessible sources of crowdsourced geographic data. We also propose MC-CNN, an active learning-based CNN training framework that handles the quality issues of the labels extracted from these sources. These issues include incompleteness (e.g., some kinds of objects are not labeled) and heterogeneity (e.g., labels at different spatial granularities). We evaluate the method on building mapping in South Malawi and road mapping in Guinea, using level-18 satellite images from Bing Maps and volunteered geographic information (VGI) from OpenStreetMap, MapSwipe, and OsmAnd.
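"Level-18" refers to the zoom level of the Bing Maps tile system. In this system, imagery is served as 256×256-pixel Web Mercator tiles arranged in a quadtree, giving roughly 0.6 m per pixel at the equator at level 18. Each tile is addressed by a quadkey. The sketch below follows Microsoft's published Bing Maps Tile System conversion to map a latitude/longitude to its tile and quadkey. It is not code from the study. The function names are hypothetical, and the way the study itself indexes its image tiles is an assumption not stated here.

```python
import math

# Latitude limits of the Web Mercator projection used by Bing Maps tiles.
MIN_LAT, MAX_LAT = -85.05112878, 85.05112878
TILE_SIZE = 256  # pixels per tile edge


def _clip(value: float, low: float, high: float) -> float:
    return min(max(value, low), high)


def lat_lon_to_tile(lat: float, lon: float, level: int = 18) -> tuple[int, int]:
    """Return (tile_x, tile_y) of the tile containing (lat, lon) at the given level.

    Hypothetical helper following the Bing Maps Tile System pixel conversion.
    """
    lat = _clip(lat, MIN_LAT, MAX_LAT)
    lon = _clip(lon, -180.0, 180.0)
    map_size = TILE_SIZE << level  # width/height of the whole map in pixels
    sin_lat = math.sin(math.radians(lat))
    x = (lon + 180.0) / 360.0
    y = 0.5 - math.log((1 + sin_lat) / (1 - sin_lat)) / (4 * math.pi)
    # Convert to pixel coordinates, rounding and clamping to the map bounds.
    pixel_x = int(_clip(x * map_size + 0.5, 0, map_size - 1))
    pixel_y = int(_clip(y * map_size + 0.5, 0, map_size - 1))
    return pixel_x // TILE_SIZE, pixel_y // TILE_SIZE


def tile_to_quadkey(tile_x: int, tile_y: int, level: int = 18) -> str:
    """Interleave the bits of tile_x and tile_y into a base-4 quadkey string."""
    digits = []
    for i in range(level, 0, -1):
        mask = 1 << (i - 1)
        digit = 0
        if tile_x & mask:
            digit += 1
        if tile_y & mask:
            digit += 2
        digits.append(str(digit))
    return "".join(digits)


if __name__ == "__main__":
    tx, ty = lat_lon_to_tile(-15.8, 35.0)  # an arbitrary point in southern Malawi
    print(tx, ty, tile_to_quadkey(tx, ty))
```

Each quadkey digit selects one of four child tiles, so a level-18 quadkey has 18 digits. Its prefixes identify the enclosing lower-level tiles, which makes it easy to group or join tile-level labels from different sources.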