Crowdsourced street-level imagery can be used to detect and map humanitarian-relevant features in near real time. We have developed a machine-learning-based analytical pipeline that integrates with the open-source imagery catalogue Panoramax to support more flexible disaster response and urban monitoring, especially in underserved regions.

This blog article was originally posted on Medium by Maciej Adamiak, machine learning expert at HeiGIT.

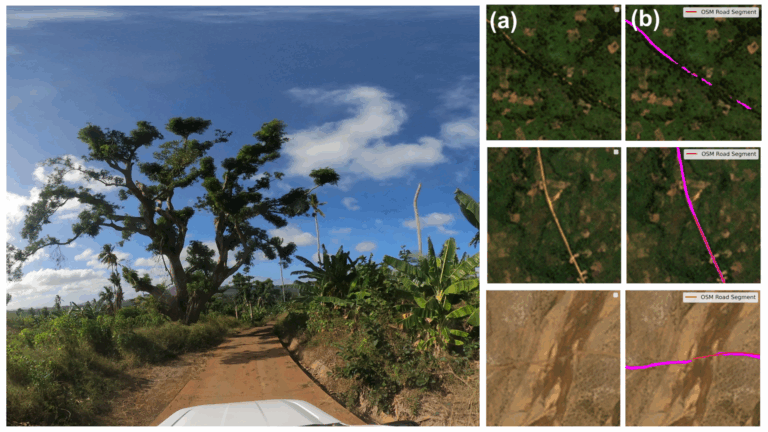

Remote sensing gives us multiple ways to look at the world. Satellites offer great coverage: you can capture huge areas in one shot and spot patterns that you’d never notice from the ground. UAV flights bring us significantly closer, delivering resolution sharp enough to distinguish individual objects (trees, vehicles, buildings) and revealing spatial relationships that satellites can only hint at. Each platform operates at its optimal altitude, capturing the data it’s best suited for, yet both share the same top-down viewing angle.

While their downward perspective is powerful for understanding landscapes and monitoring change over time, it misses an entire human-scale dimension of detail that becomes visible only when you shift to a horizontal view at ground level. That’s where street-level imagery comes in, capturing the textures, signage, infrastructure conditions, and features that define how people actually experience and navigate our environment.

Until recently, access to spherical imagery was dominated by Google Street View. Street View remains exceptional in quality, but its strengths come with tradeoffs: limited API accessibility, infrequent update cycles, and restricted coverage in developing regions where humanitarian needs are greatest. The data collection model — requiring specialised high-resolution camera systems — makes rapid updates challenging.

Crowdsourced platforms are changing this landscape dramatically. Mapillary alone provides direct access to over 2 billion images, while Panoramax contributes 75 million more. These platforms accept uploads from any camera (smartphones, dashcams, action cameras), making data collection accessible to local communities, aid workers, and volunteers. Update frequency is no longer constrained by corporate mapping schedules. After a disaster, contributors can document conditions within hours, providing fresh intelligence when it matters most.

Accessibility extends beyond data collection. Most crowdsourced platforms offer open APIs, on-premise deployment options, and permissive licensing, enabling researchers and humanitarian organisations to build custom computer vision pipelines without negotiating enterprise agreements. You can download imagery, train models, and deploy applications that serve specific operational needs. It is in this area that the HeiGIT team feels at its best!

During our institute’s innovation summer, a time dedicated to tackling mind-boggling challenges, we (together with Oliver Fritz, Levi Szamek, Michael Auer, and Marcel Maurer) developed the idea of an analytical pipeline for crowdsourced street-level imagery. In this blog post, we present our concept.

Short note on Panoramax

Panoramax represents a significant shift in how we access street-level imagery. Unlike proprietary platforms, it’s fully open source with a federated architecture: anyone can host an instance, and all data remains accessible through a unified meta-catalogue at https://api.panoramax.xyz/. Moreover, the platform emphasises privacy by design, with automated face and license plate blurring, and offers complete control over data storage and access policies. The Panoramax GitLab is a great place to learn about the whole ecosystem, and even to deploy it locally using docker-compose.
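To make the meta-catalogue a little more concrete, here is a minimal sketch of querying it for images inside a bounding box. It assumes the catalogue exposes a STAC-style item search at `/api/search` that accepts `bbox` and `limit` parameters and returns items carrying `id`, `collection` and a point geometry; `build_search_url`, `parse_items` and `search_images` are hypothetical helper names, not part of any Panoramax client library.

```python
import json
import urllib.parse
import urllib.request

# Assumption: STAC-style item search endpoint of the meta-catalogue
PANORAMAX_API = "https://api.panoramax.xyz/api"


def build_search_url(bbox, limit=10, base=PANORAMAX_API):
    """Build an item-search URL for a (min_lon, min_lat, max_lon, max_lat) bbox."""
    query = urllib.parse.urlencode({
        "bbox": ",".join(str(v) for v in bbox),
        "limit": limit,
    })
    return f"{base}/search?{query}"


def parse_items(feature_collection):
    """Extract (item id, collection id, lon, lat) tuples from a STAC FeatureCollection."""
    items = []
    for feature in feature_collection.get("features", []):
        lon, lat = feature["geometry"]["coordinates"][:2]
        items.append((feature["id"], feature.get("collection"), lon, lat))
    return items


def search_images(bbox, limit=10):
    """Query the meta-catalogue over the network and return parsed items."""
    with urllib.request.urlopen(build_search_url(bbox, limit)) as response:
        return parse_items(json.load(response))
```

Keeping URL construction and parsing separate from the network call means both can be checked offline against a saved response.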

Street-level imagery to actionable data

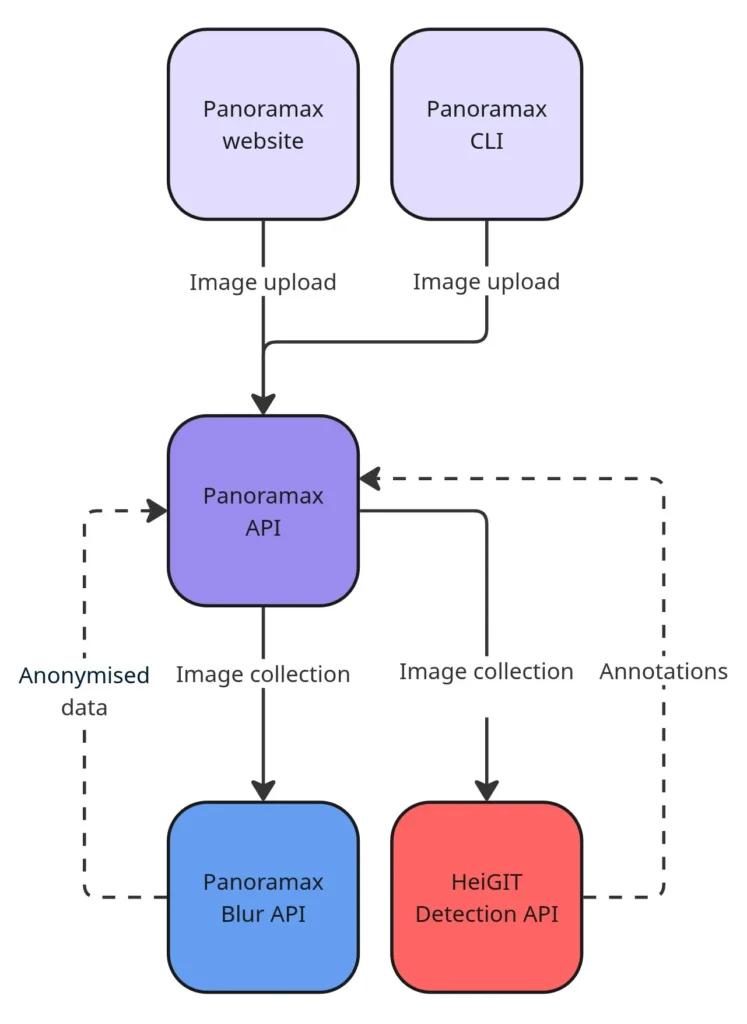

During innovation summer, we developed and implemented a technical architecture that integrates seamlessly with Panoramax’s existing workflow.

The concept is straightforward: when contributors upload images, our service automatically extracts humanitarian-relevant features and generates structured, georeferenced annotations. These annotations are then sent back to the Panoramax instance, which can already display them along with all relevant attributes.
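The upload-to-annotation flow can be sketched as a small orchestration loop. This is a minimal sketch under assumptions: `UploadEvent`, `AnnotationPipeline` and the `publish` callback are hypothetical names, and how upload notifications reach the service and how annotations are written back to Panoramax are deliberately left abstract.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, List, Tuple


@dataclass
class UploadEvent:
    """Hypothetical notification emitted when a contributor uploads an image."""
    image_id: str
    collection_id: str


# Hypothetical contract: an analyser turns one uploaded image into annotation records
Analyser = Callable[[UploadEvent], Iterable[dict]]


@dataclass
class AnnotationPipeline:
    analysers: List[Analyser]
    # Writes annotations back to the Panoramax instance (e.g. an HTTP request); injected so it can be stubbed
    publish: Callable[[str, List[dict]], None]
    failures: List[Tuple[str, str]] = field(default_factory=list)

    def handle(self, event: UploadEvent) -> int:
        """Run every analyser on the new image and publish the combined annotations."""
        annotations: List[dict] = []
        for analyse in self.analysers:
            try:
                annotations.extend(analyse(event))
            except Exception:
                # A failing model is recorded but does not block the other analysers
                self.failures.append((event.image_id, getattr(analyse, "__name__", repr(analyse))))
        if annotations:
            self.publish(event.image_id, annotations)
        return len(annotations)
```

Injecting `publish` keeps the orchestration independent of the transport and easy to test with a stub.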

The service itself is model-agnostic: different deep learning architectures can be deployed depending on the task, either by downloading weights directly from the Hugging Face Hub or by constructing a model from locally stored files.

We tested three complementary approaches:

Classification models identify image-level characteristics such as waste accumulation or road surface conditions. They work well for broad area assessment and are enormously helpful for quickly determining the "topic" of an image.

Object detection models locate specific features with bounding boxes — traffic signs, vehicles, infrastructure elements. This tool is excellent for building an overview of topographic objects present in the analysed area.

Instance segmentation models provide pixel-level precision for complex scenes, distinguishing individual trees, buildings, road surfaces, and pedestrians.
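The three approaches produce outputs at different granularity: scores for the whole image, boxes, and pixel masks. A minimal sketch of bringing them to comparable shapes follows. It assumes that classification is multi-label (one sigmoid per label, so an image can show both waste and a pothole) and that masks arrive as binary grids; `image_topics`, `bbox_from_mask` and `footprint` are hypothetical helpers.

```python
import math
from typing import Dict, Optional, Sequence, Tuple

BBox = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax) in pixels


def image_topics(logits: Sequence[float], labels: Sequence[str], threshold: float = 0.5) -> Dict[str, float]:
    """Classification: independent sigmoid per label, keep labels at or above the threshold."""
    scores = {label: 1.0 / (1.0 + math.exp(-z)) for label, z in zip(labels, logits)}
    return {label: s for label, s in scores.items() if s >= threshold}


def bbox_from_mask(mask: Sequence[Sequence[bool]]) -> Optional[BBox]:
    """Instance segmentation: reduce a binary mask to the box a detector would report."""
    rows = [y for y, row in enumerate(mask) if any(row)]
    if not rows:
        return None
    cols = [x for x in range(len(mask[0])) if any(row[x] for row in mask)]
    return min(cols), min(rows), max(cols) + 1, max(rows) + 1


def footprint(box: BBox) -> Tuple[float, int]:
    """Bottom-centre pixel of a box: roughly where an upright object meets the ground."""
    xmin, _, xmax, ymax = box
    return (xmin + xmax) / 2, ymax
```

With detections and masks both reduced to boxes, the same downstream code (storage, display, geolocation) can serve either model type.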

Our service in action, integrated with Panoramax (from left): waste and pothole classification, traffic sign detection, and instance segmentation with geo-coordinate estimation. Image source: OpenMap Development Tanzania (OMDTZ)

What’s more, we didn’t stop at mask delineation: we also tried out a neat approach to estimating each instance’s geographic coordinates. Now we know not only where each object is in the spherical imagery scene, but also where it is actually located on a map. Imagine the possibilities of multimodal approaches that combine satellite and street-level data.
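One plausible way to estimate such coordinates is sketched below; it is not necessarily the approach used in our service. It assumes an equirectangular panorama whose horizontal centre points along the camera heading, a horizon on the middle row, flat ground, and a known camera height (2 m is a hypothetical default). The object's ground-contact pixel is converted to a bearing and a depression angle, the angle to a ground distance, and the distance to a map position with the standard great-circle destination formula. All names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius


@dataclass
class CameraPose:
    lat: float
    lon: float
    heading_deg: float  # azimuth of the panorama centre, clockwise from north
    height_m: float = 2.0  # hypothetical camera height above ground


def pixel_to_angles(x: float, y: float, width: int, height: int, heading_deg: float) -> Tuple[float, float]:
    """Equirectangular pixel -> (bearing, elevation) in degrees."""
    bearing = (heading_deg + (x / width - 0.5) * 360.0) % 360.0
    elevation = (0.5 - y / height) * 180.0
    return bearing, elevation


def ground_distance(elevation_deg: float, camera_height_m: float) -> Optional[float]:
    """Flat-ground assumption: a pixel below the horizon hits the ground at h / tan(depression)."""
    if elevation_deg >= 0:
        return None  # at or above the horizon: no ground intersection
    return camera_height_m / math.tan(math.radians(-elevation_deg))


def destination(lat: float, lon: float, bearing_deg: float, distance_m: float) -> Tuple[float, float]:
    """Great-circle destination point given a start, bearing and distance."""
    phi1, lam1 = math.radians(lat), math.radians(lon)
    theta = math.radians(bearing_deg)
    delta = distance_m / EARTH_RADIUS_M
    phi2 = math.asin(math.sin(phi1) * math.cos(delta) + math.cos(phi1) * math.sin(delta) * math.cos(theta))
    lam2 = lam1 + math.atan2(math.sin(theta) * math.sin(delta) * math.cos(phi1),
                             math.cos(delta) - math.sin(phi1) * math.sin(phi2))
    return math.degrees(phi2), (math.degrees(lam2) + 540.0) % 360.0 - 180.0


def estimate_object_location(pose: CameraPose, x: float, y: float, width: int, height: int) -> Optional[Tuple[float, float]]:
    """Map position (lat, lon) of the ground-contact pixel (x, y), or None if it lies above the horizon."""
    bearing, elevation = pixel_to_angles(x, y, width, height, pose.heading_deg)
    dist = ground_distance(elevation, pose.height_m)
    if dist is None:
        return None
    return destination(pose.lat, pose.lon, bearing, dist)
```

The flat-ground step is the weakest assumption; on slopes or for distant objects, a depth estimate or triangulation across neighbouring images would be more robust.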

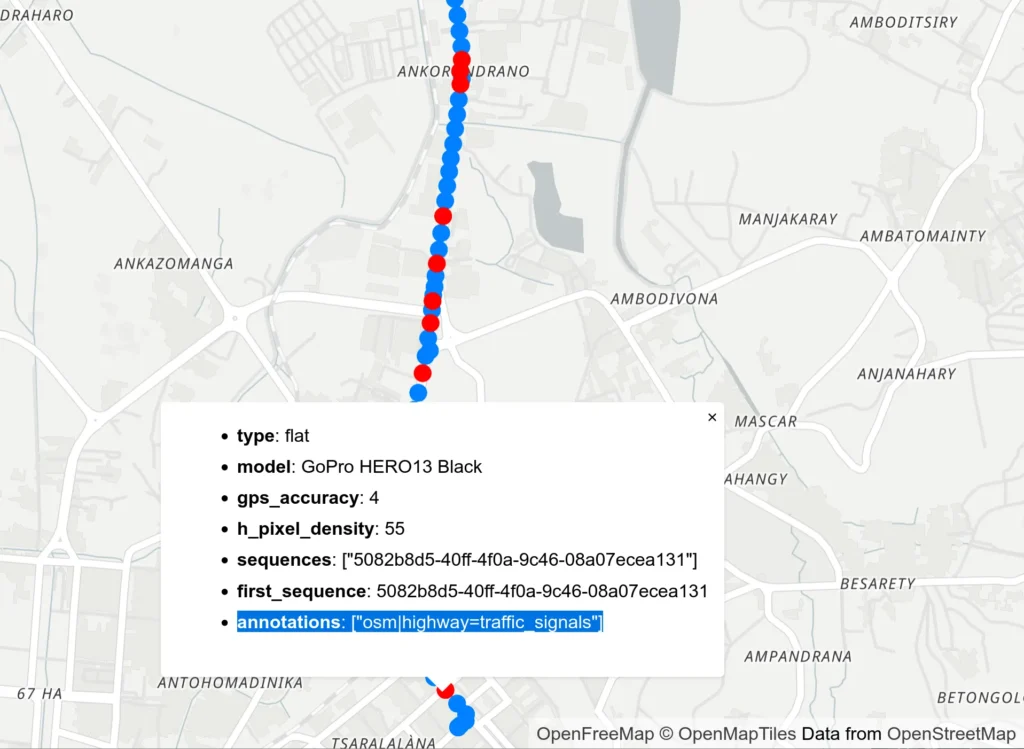

Through the AILAS project, HeiGIT collaborated with the Malagasy Red Cross to support their field operations. Two field teams equipped with cameras have captured approximately 20,000 images (so far!) across urban and rural areas in Madagascar. As their image collection continues, our detection methods could potentially support similar Red Cross initiatives in the future.

The imagery flowed through our detection pipeline, generating thousands of automatically detected features covering humanitarian-relevant categories: infrastructure conditions, accessibility features, potential hazards, and critical facilities.
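Raw model labels rarely match humanitarian categories one-to-one, so some mapping step is needed before counts are meaningful to field teams. The sketch below assumes a simple lookup table; the specific label-to-category assignments in `CATEGORY_OF` are hypothetical examples, not the mapping used with the Madagascar imagery.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical mapping from raw model labels to humanitarian categories
CATEGORY_OF: Dict[str, str] = {
    "pothole": "infrastructure conditions",
    "ramp": "accessibility features",
    "waste": "potential hazards",
    "fire hydrant": "critical facilities",
}


def summarise(labels: Iterable[str], mapping: Dict[str, str] = CATEGORY_OF) -> Counter:
    """Count detections per humanitarian category; unmapped labels are grouped as 'other'."""
    return Counter(mapping.get(label, "other") for label in labels)
```

Keeping the mapping as data rather than code lets partners adapt categories to their own operational vocabulary without retraining models.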

The results appear in multiple formats: as annotations viewable in the Panoramax interface, as vector tiles for GIS analysis, and as structured data accessible through the API. This flexibility enables different workflows depending on operational needs.
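As an illustration of the structured-data route, the following minimal sketch serialises georeferenced annotations as a GeoJSON FeatureCollection, a format most GIS tools read directly and that tools such as tippecanoe can turn into vector tiles. It assumes annotations are plain dicts with `lon`/`lat` keys for the estimated map position; `to_geojson` is a hypothetical helper.

```python
import json
from typing import Iterable, Mapping


def to_geojson(annotations: Iterable[Mapping]) -> str:
    """Serialise georeferenced annotations as a GeoJSON FeatureCollection.

    Annotations without an estimated map position are skipped.
    """
    features = []
    for a in annotations:
        if a.get("lon") is None or a.get("lat") is None:
            continue
        features.append({
            "type": "Feature",
            # GeoJSON positions are ordered longitude, latitude
            "geometry": {"type": "Point", "coordinates": [a["lon"], a["lat"]]},
            "properties": {k: a[k] for k in ("image_id", "label", "score") if k in a},
        })
    return json.dumps({"type": "FeatureCollection", "features": features})
```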

Here you can find a code snippet for running an object detection model. The crucial part is preparing the container that holds all annotation data properly:

```python
import uuid
from dataclasses import dataclass
from typing import Generator, Optional

import numpy as np
import shapely
import torch
from shapely import Point, Polygon
from transformers import AutoImageProcessor, AutoModelForObjectDetection


@dataclass
class Annotation:
    image_id: str
    collection_id: str
    key: str
    label: str
    score: float
    model_ref: str
    xy: Optional[Point]  # estimated map position, if available
    service_ref: str
    geometry: Optional[Polygon] = None  # bounding box in pixel coordinates


@dataclass
class PanoramaxImage:
    id: str
    collection_id: str
    arr: np.ndarray  # H x W x 3 RGB pixel array
    coordinates: Point  # camera position
    azimuth: Optional[float]  # camera heading in degrees, if known


class DetectionService:
    # Hypothetical minimal wrapper so that `self.detection_key` resolves;
    # in the full service this method belongs to a larger class.
    detection_key = 'object-detection'  # illustrative value

    def object_detection(
        self,
        image: PanoramaxImage,
        model_ref: str = 'facebook/detr-resnet-50',
        threshold: float = 0.5,
    ) -> Generator[Annotation, None, None]:
        # The 'no_timm' revision of facebook/detr-resnet-50 avoids the timm dependency.
        # In a long-running service, load processor and model once and reuse them.
        processor = AutoImageProcessor.from_pretrained(model_ref, revision='no_timm')
        model = AutoModelForObjectDetection.from_pretrained(model_ref, revision='no_timm')

        inputs = processor(images=image.arr, return_tensors='pt')
        with torch.no_grad():  # inference only, no gradients needed
            outputs = model(**inputs)

        # Rescale boxes to the original (height, width) and drop low-confidence detections
        results = processor.post_process_object_detection(
            outputs,
            target_sizes=torch.tensor([image.arr.shape[:2]]),
            threshold=threshold,
        )[0]

        for score, label, box in zip(results['scores'], results['labels'], results['boxes']):
            box = box.int().tolist()  # (xmin, ymin, xmax, ymax)
            yield Annotation(
                image_id=image.id,
                collection_id=image.collection_id,
                key=f'{uuid.uuid4()}',
                label=model.config.id2label[label.item()],
                score=score.item(),
                geometry=shapely.box(*box),
                model_ref=model_ref,
                service_ref=self.detection_key,
                xy=None,
            )
```

Next steps

Innovation summer proved that the concept works! Now we would like to engage with the Panoramax community about production deployment and to find humanitarian partners with operational use cases.

The potential applications span the disaster management cycle: preparedness mapping, rapid damage assessment, recovery monitoring, and development planning. Each context may require different detection models, but the underlying infrastructure remains consistent.

If you are working on applications for humanitarian response or have a use case where crowdsourced geospatial intelligence could make a difference, we would love to hear about it! Our team collaborates with NGOs and humanitarian organisations to develop and deploy these technologies in operational contexts. Reach out at humanitarian_gi@heigit.org to discuss potential collaborations or technical implementations.