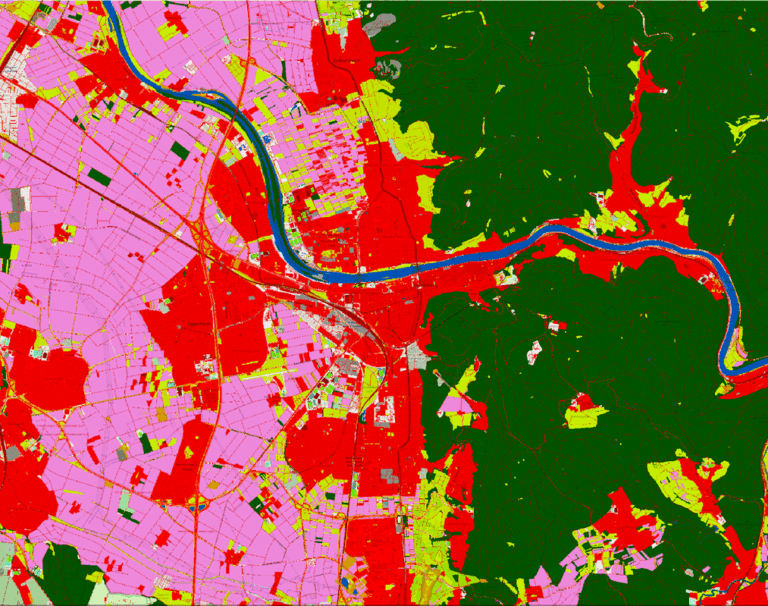

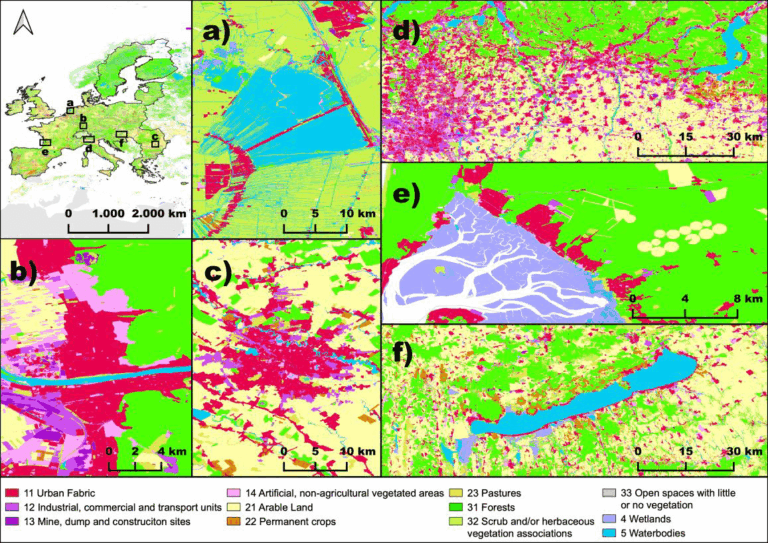

After more than a decade of rapid development, volunteered geographic information (VGI) has become one of the most important research topics in the GIScience community. Over the same period, we have witnessed the fast growth of geospatial deep learning technologies, which are used to develop smart GIServices and to address remote sensing tasks such as land use/land cover classification, object detection, and change detection. Nevertheless, the lack of abundant training samples and of accurate semantic information has long been identified as a modeling bottleneck for such data-hungry deep learning applications. OpenStreetMap (OSM) shows great potential for tackling this bottleneck by providing massive and freely accessible geospatial training samples. More importantly, OSM retains its full editing history, which can be further analyzed and employed to provide intrinsic data quality measurements of the training samples.

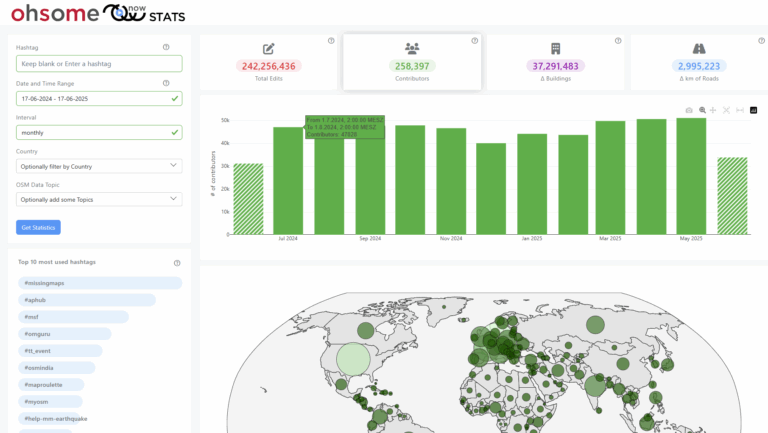

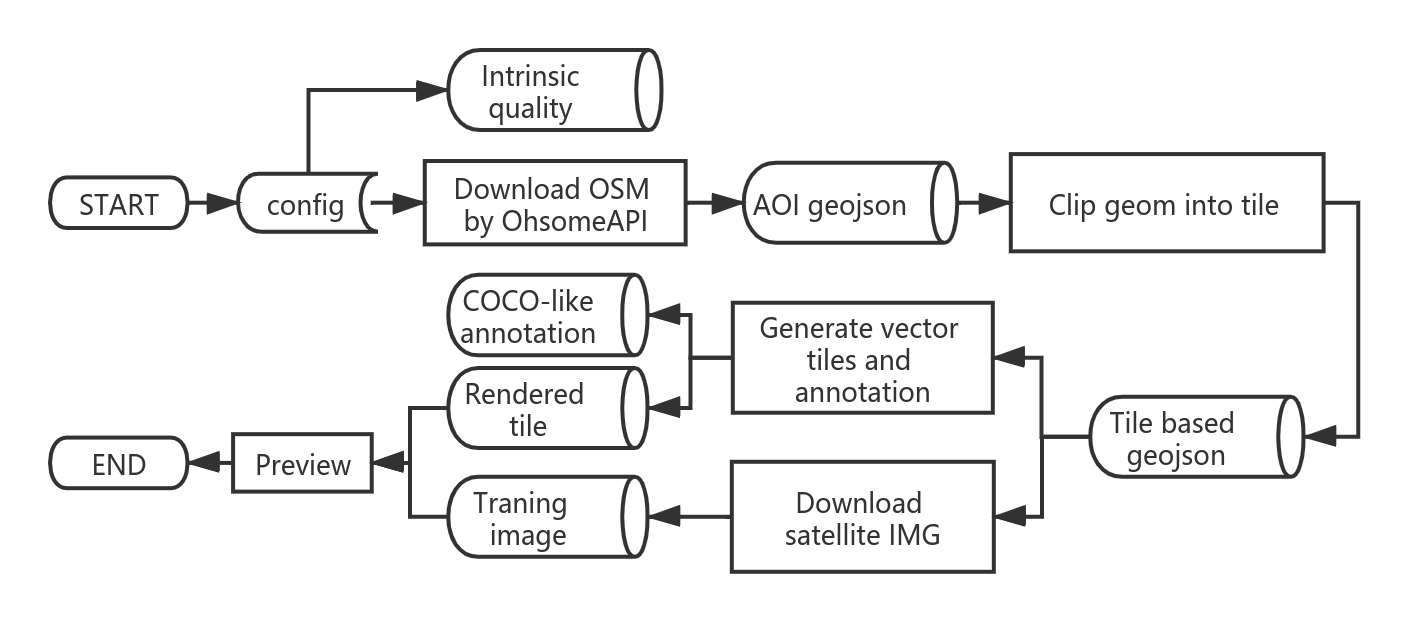

Recently, we open-sourced our ohsome2label tool, which offers a flexible framework for labeling customized geospatial objects using historical OSM data, with the goal of more effective and efficient deep learning. Built on the ohsome API developed by HeiGIT gGmbH, ohsome2label aims to mitigate the lack of abundant high-quality training samples in geospatial deep learning by automatically extracting customized historical OSM features and by providing intrinsic OSM data quality measurements.

Package description flowchart of ohsome2label.
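To give a feel for the kind of historical query that ohsome2label builds on, here is a minimal sketch of a request against the ohsome API. It is not taken from the ohsome2label code base: the helper names `build_count_query` and `fetch_count`, the base URL constant, and the example bounding box are assumptions made for illustration, and the shape of the response (`result` holding one entry per timestamp) should be checked against the ohsome API documentation.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed base URL of the public ohsome API instance.
OHSOME_API = "https://api.ohsome.org/v1"


def build_count_query(bbox, osm_filter, time):
    """Build form parameters for an ohsome `/elements/count` request.

    bbox       -- (west, south, east, north) in WGS84 degrees
    osm_filter -- ohsome filter string, e.g. "building=* and geometry:polygon"
    time       -- ISO 8601 timestamp or interval, e.g. "2014-01-01/2020-01-01/P1Y"
    """
    west, south, east, north = bbox
    if not (west < east and south < north):
        raise ValueError("bbox must be (west, south, east, north)")
    return {
        "bboxes": f"{west},{south},{east},{north}",
        "filter": osm_filter,
        "time": time,
    }


def fetch_count(params, base_url=OHSOME_API):
    """POST the query and return the list of per-timestamp results.

    Hypothetical helper: needs network access, and assumes the JSON
    response carries the time series under the "result" key.
    """
    data = urlencode(params).encode("utf-8")
    with urlopen(f"{base_url}/elements/count", data=data, timeout=60) as resp:
        return json.load(resp)["result"]
```

Calling `fetch_count(build_count_query((8.67, 49.39, 8.71, 49.42), "building=* and geometry:polygon", "2014-01-01/2020-01-01/P1Y"))` would then request yearly building counts for a small area. Keeping the parameter construction separate from the HTTP call makes the query easy to inspect and test without network access.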

The aim of this tool is to promote the application of geospatial deep learning by generating and assessing OSM training samples of user-specified objects. This not only allows users to train geospatial detection models but also brings intrinsic quality assessment into the “black box” of deep learning model training. Building on this deeper understanding of training sample quality, future efforts are needed towards more understandable and geographically aware deep learning models.
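As a rough illustration of what an intrinsic, history-based quality measurement can look like, the sketch below derives a simple saturation signal from a time series of feature counts. This is a hypothetical indicator chosen for illustration, not the measurement implemented in ohsome2label; the function names, the window size, and the threshold are all assumptions.

```python
def relative_growth(counts):
    """Relative change between consecutive snapshot counts."""
    growth = []
    for prev, curr in zip(counts, counts[1:]):
        if prev:
            growth.append((curr - prev) / prev)
        elif curr:
            # Growth from zero is unbounded.
            growth.append(float("inf"))
        else:
            growth.append(0.0)
    return growth


def looks_saturated(counts, window=2, threshold=0.05):
    """Heuristic: mapping activity has levelled off if the last `window`
    growth rates all stay below `threshold` in absolute value.

    Both defaults are illustrative assumptions, not calibrated values.
    """
    recent = relative_growth(counts)[-window:]
    return len(recent) == window and all(abs(g) < threshold for g in recent)
```

For yearly building counts such as `[100, 400, 900, 980, 1000]`, the growth rates shrink from one snapshot to the next, which suggests that mapping in the area is approaching completeness. Samples from areas that are still growing quickly could then be treated with more caution during training.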

Follow our further activities on GitHub, and stay tuned for more ohsome news: https://github.com/GIScience/ohsome2label

You are also invited to read our conference paper in the proceedings of the Academic Track at State of the Map 2020:

Wu, Zhaoyan, Li, Hao, & Zipf, Alexander. (2020). From Historical OpenStreetMap data to customized training samples for geospatial machine learning. In Proceedings of the Academic Track at the State of the Map 2020 Online Conference, July 4-5, 2020. DOI: http://doi.org/10.5281/zenodo.3923040

Find more information about the ohsome API:

The ohsome OpenStreetMap History Data Analytics Platform makes OpenStreetMap’s full-history data more easily accessible for various kinds of OSM data analytics tasks, such as data quality analysis, on a global scale. The ohsome API is one of its components, providing free and easy access to some of the functionalities of the ohsome platform via HTTP requests. More information can be found here:

- ohsome general idea

- ohsome general architecture

- the whole “how to become ohsome” tutorials series

- Li, H., Herfort, B., Huang, W., Zia, M. and Zipf, A. (2020): Exploration of OpenStreetMap Missing Built-up Areas using Twitter Hierarchical Clustering and Deep Learning in Mozambique. ISPRS Journal of Photogrammetry and Remote Sensing. https://doi.org/10.1016/j.isprsjprs.2020.05.007

- Herfort, B., Li, H., Fendrich, S., Lautenbach, S., Zipf, A. (2019): Mapping Human Settlements with Higher Accuracy and Less Volunteer Efforts by Combining Crowdsourcing and Deep Learning. Remote Sensing 11(15), 1799. https://doi.org/10.3390/rs11151799

- Li, H., Herfort, B., Zipf, A. (2019): Estimating OpenStreetMap Missing Built-up Areas using Pre-trained Deep Neural Networks. 22nd AGILE Conference on Geographic Information Science, Limassol, Cyprus.

- Chen, J., Zhou, Y., Zipf, A. and Fan, H. (2018): Deep Learning from Multiple Crowds: A Case Study of Humanitarian Mapping. IEEE Transactions on Geoscience and Remote Sensing (TGRS). 1-10. https://doi.org/10.1109/TGRS.2018.2868748

- Li, H., Ghamisi, P., Rasti, B., Wu, Z., Shapiro, A., Schultz, M., Zipf, A. (2020): A Multi-Sensor Fusion Framework Based on Coupled Residual Convolutional Neural Networks. Remote Sensing 12, 2067. https://doi.org/10.3390/rs12122067

- Schultz, M., Voss, J., Auer, M., Carter, S., and Zipf, A. (2017): Open land cover from OpenStreetMap and remote sensing. International Journal of Applied Earth Observation and Geoinformation, 63, pp. 206-213. https://doi.org/10.1016/j.jag.2017.07.014. See also: http://osmlanduse.org