We specialize in converting complex open geo-datasets into actionable insights, with a particular emphasis on humanitarian and climate-related applications. Drawing on our experience in research and development, we bridge the gap between technology and real-world use. Our custom-built tools and processes, which incorporate advanced methods from spatial data mining and deep learning, are designed to meet the specific data quality and enrichment needs of our partners.

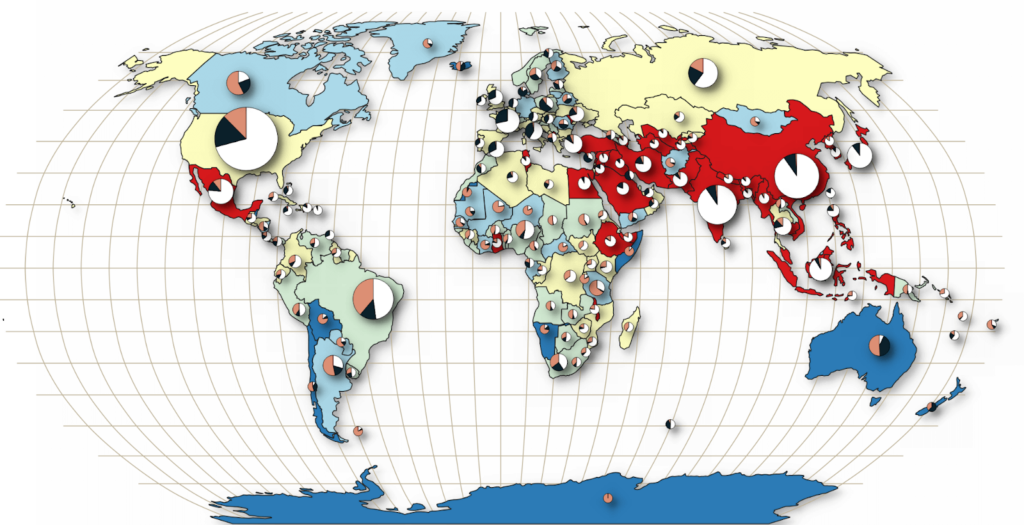

Tools to measure completeness, correctness, and thematic accuracy of OpenStreetMap (OSM) data globally and in near real-time.

Enriched OSM data in analysis-ready formats, tailored for data scientists.

Collaborations with public sector, international organizations & research institutions to enhance methods and maintain high-quality standards.

OpenStreetMap is a vast source of free, user-generated geodata for a wide variety of use cases. However, because data generation methods are not standardized and quality requirements vary, users may face challenges. We address this by developing software and services that calculate OSM data quality indicators, either globally or for specific areas, helping users assess whether the data meets their project needs.

The ohsome dashboard enables users to analyze OSM’s full-history data without programming skills. It generates accurate statistics and plots them directly in the dashboard, with customizable filtering and grouping by tags and types for any region or time period. The ohsome quality API (OQAPI) provides access to OSM data quality information for specific regions and use cases, which can benefit humanitarian organizations and public administrations, for example.

Beyond software development, we focus on enriching OSM datasets that are stored in a data lake accessible to users. Due to OSM’s crowdsourced nature, the data often varies in quality, posing challenges for researchers, especially those working with large datasets.

We use these technologies to add missing attributes, making the data ready for use.

We provide collaboration partners with data that’s complete, formatted, and enriched with attributes, depending on the project’s needs.

Access to high-quality datasets reduces preparation time and increases the accuracy of machine learning models.

Road Surface Type offers a global dataset based on 105 million images from Mapillary, categorizing roads as either paved or unpaved. Processed with AI techniques, it supports applications in economic development, environmental sustainability, route planning, and emergency response. Integrated with OSM, it enhances road attributes and offers insights into surface variations.

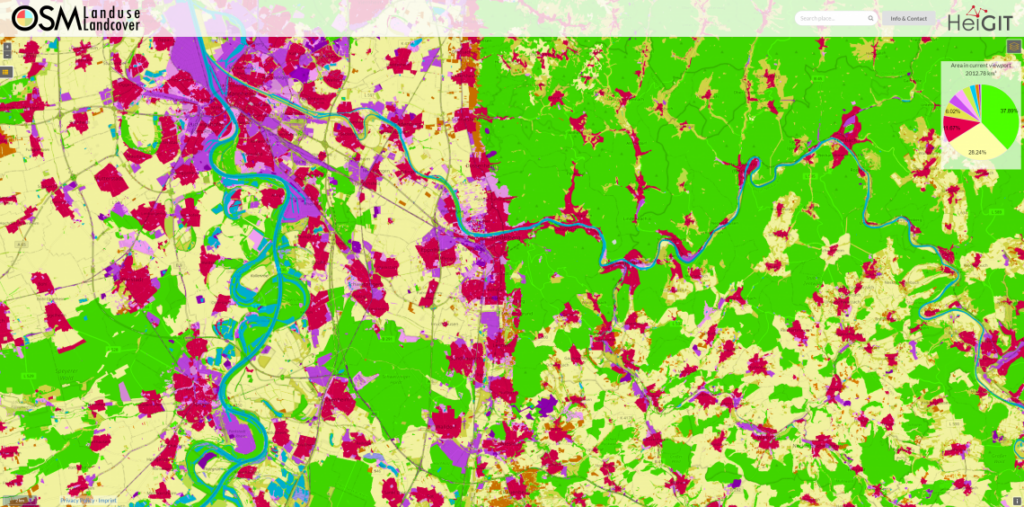

The OSM Land Use Land Cover Map web application offers a global view of land use patterns based on OSM data. It visualizes land use classifications for a single point in time, combining detailed maps with land use categories such as residential, commercial, and industrial areas. Users can zoom in for detailed views, making it a valuable tool for analyzing land use dynamics.

Our services and software help users analyze OSM data and its changes over time. They are primarily used by the OSM community, humanitarian organizations, and researchers seeking to gain insights from evolving geospatial data.

The ohsomeNow Stats dashboard provides near real-time global statistics on OSM mapping activity, including metrics such as contributor count, map edits, added buildings, and road length. Users can filter by OSM changeset hashtags and access statistics dating back to April 21, 2009. This tool was developed in collaboration with the Humanitarian OpenStreetMap Team (HOT).

The enhanced ohsomeNow Stats dashboard now provides near real-time access…

This project explores how OpenStreetMap data can complement satellite imagery…

The paper, published in Nature Scientific Data, presents the first…

We are excited to share the release of the ohsome-planet…

The concept of a “Digital Earth” has long envisioned a…